From Wikipedia to ChatGPT

The cycle of knowledge anxiety

1.

There was a period in the aughts, not long after Wikipedia’s rise in popularity, when a debate broke out over the meaning and relevance of Wikipedia. How could a source that anyone could edit be a reliable source of knowledge?

Traditionalists were appalled that something like Wikipedia could replace the hallowed Encyclopaedia Britannica. Could we trust unvetted material? What would such unverified content do to our minds? Others wrote about the wisdom of the crowd and the self-correcting nature of the wiki model.

Cultural debates often fizzle out, and this one was no exception. People stopped arguing about whether Wikipedia was precise, correct, truthful, or expert-verified. Those who found that it met their specific needs adopted it. Nowadays we simply use Wikipedia, knowing that it could be wrong but is useful nonetheless. A fact-checking editor at The New Yorker may not take a Wikipedia page at face value, but someone looking for a quick summary of a subject will find it a great place to start, and perhaps even end.

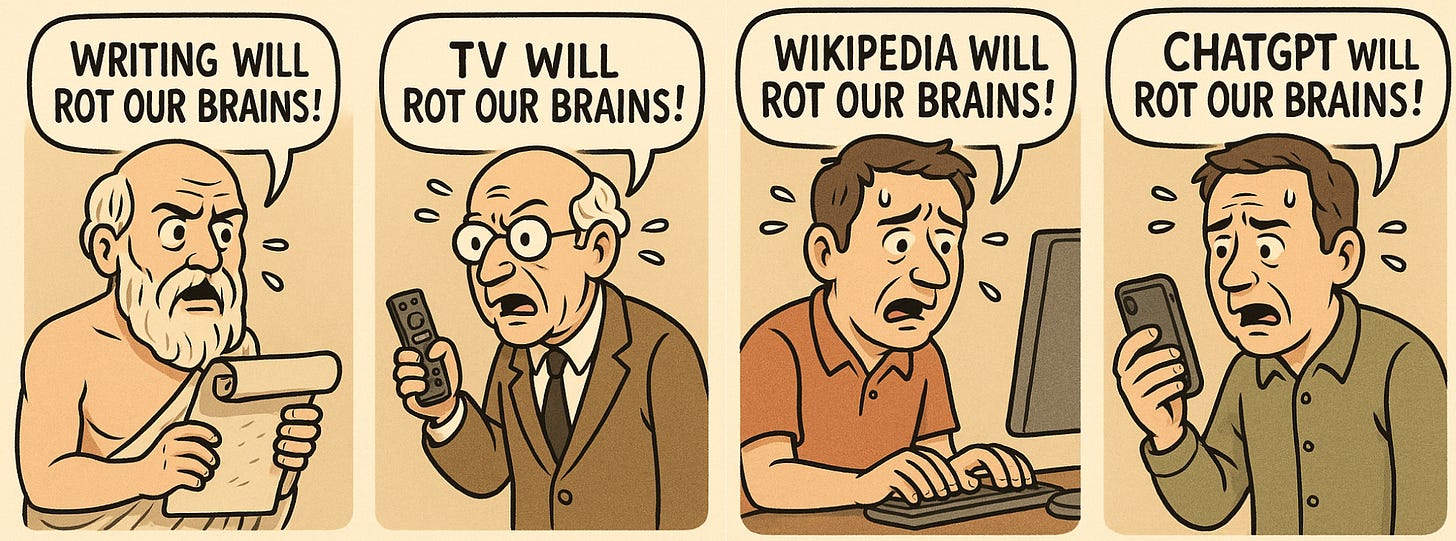

A similar debate is now taking place about GenAI. The parallels are unmissable: the debate (a war, for some) is in the domain of knowledge; truth, correctness, and verifiability are the key battlegrounds; and we worry whether the newcomer (Wikipedia then, GenAI now) will dilute our understanding and corrupt our thinking.

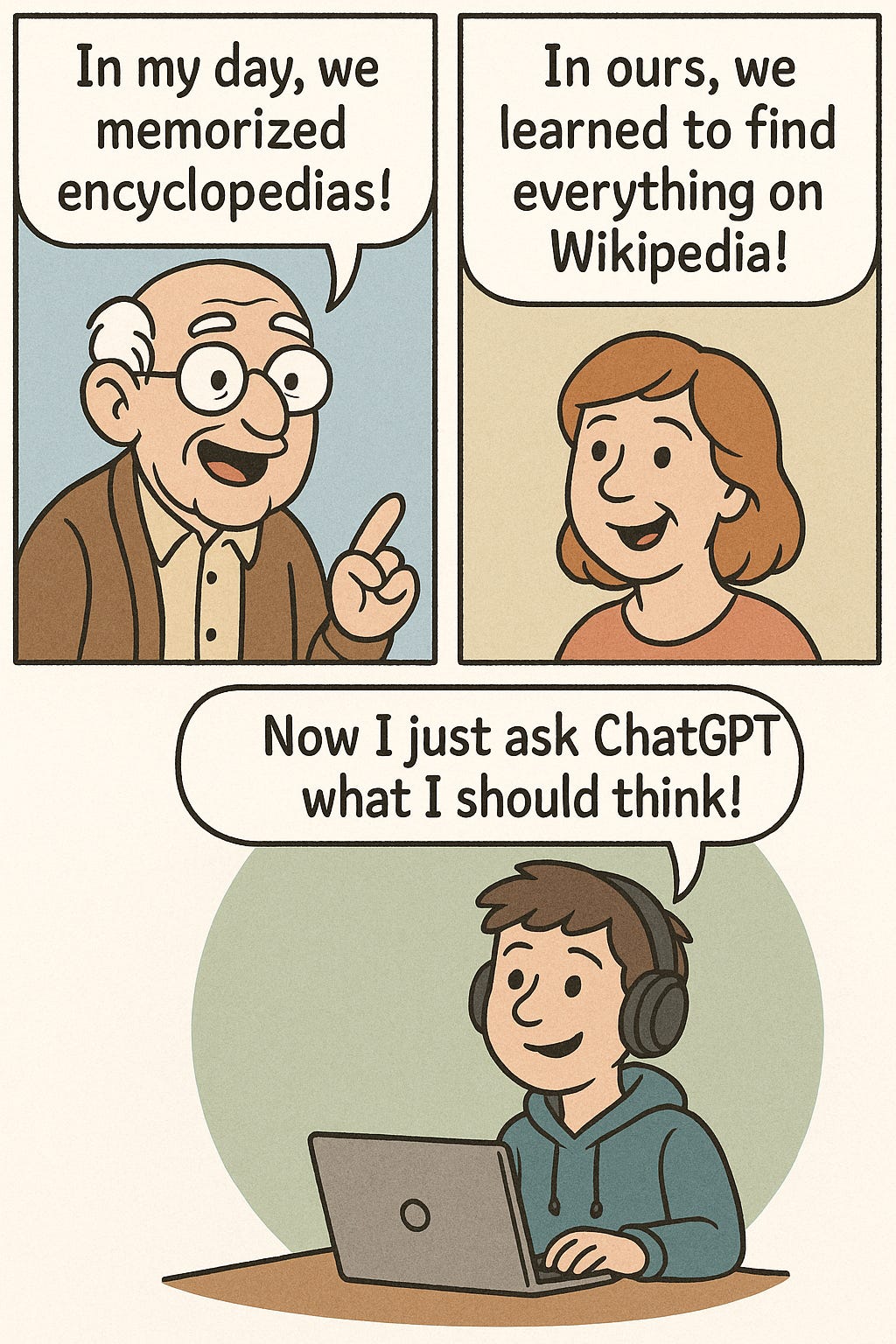

Perhaps it is the knowledge bit that triggers this anxiety. Knowledge is what makes us human; it lays the foundation for progress. Anything that upsets the established ways in which we assimilate, absorb, and disseminate knowledge disturbs us and leads us to wonder if we are losing what makes us who we are. Writing, print, the calculator, the computer, the internet (and, in that larger context, Wikipedia), and now GenAI: the debate is not new.

2.

Over the last couple of decades, Wikipedia has altered how we source, store, and assimilate knowledge. By creating a vast, always-accessible repository of information, it fosters a reliance on externalized memory: we increasingly remember how to find information rather than the information itself. The good news is that our emphasis has shifted from memorizing facts to critical evaluation and contextual understanding. This ease of access, however, creates an illusion of explanatory depth, leading us to overestimate our comprehension without truly internalizing complex knowledge.

Wikipedia symbolizes a broader societal shift toward valuing interpretation and synthesis over memorization and rote learning. GenAI (a dynamic Wikipedia?) rides this wave and pushes us further along the spectrum: we are all generalists now, equipped with the ability to quickly cull information from multiple sources and analyse (with the help of AI) vast troves of data unimaginable a couple of decades ago.

3.

The Wikipedia comparison has its limits, but it brings up an interesting question: will GenAI follow the same arc of normalisation?

In the workplace, the normalisation is already underway. Many colleagues I speak to use GenAI daily to draft emails, review concept notes, brainstorm ideas, analyse data, and conduct research. Some worry that it will blunt their critical thinking skills; others wonder what the technology will do to their jobs and to the business we are in. But such concerns do not stop them from using GenAI: I see it being integrated into most routines at work, not unlike the way email became indispensable to office work in the nineties.

On the personal front, my experience and what I hear from friends follow the same pattern: we use it for everyday tasks, from double-checking a medical diagnosis, exploring trip options, and comparing alternatives before a purchase to troubleshooting a problem at home or seeking guidance on personal matters.

So GenAI is already being woven into the fabric of our information-centered lives. And unlike Wikipedia, a source we accessed perhaps a few times a week, GenAI has become an always-on companion we turn to many times each day. The difference, though, runs deeper than the frequency of usage.

GenAI operates in the liminal space between human and machine intelligence, making it difficult to establish clear boundaries of trust and verification. Unlike Wikipedia, where we can theoretically trace back to sources and contributors, GenAI presents us with a kind of knowledge that emerges from an opaque synthesis of training data. It speaks with authority about subjects it has never experienced, offers insights drawn from patterns it cannot fully explain, and demonstrates a kind of artificial intuition that feels both impressive and hollow. We find ourselves in the strange position of evaluating the thoughts of something that cannot actually think, yet produces outputs that can match our own reasoning.

Going by how the past couple of decades have played out, it’s easy to imagine GenAI leading us to cede control over how we consume and create knowledge, accelerating a process that began with dopamine-inducing social media algorithms.

The new normal, it seems, won’t be anything like the normalisation of Wikipedia-driven fact-finding.

4.

It’s hard not to think of the irony playing out in this context: Wikipedia, once the target of skeptics challenging its trustworthiness, is now the trusted alternative to its new and hallucinating cousin, ChatGPT. And the relationship between the two is both complicated and promising.

Wikipedia's curated, human-verified content will grow more precious as the internet fills with AI-generated slop. The platform's human oversight, citation requirements, and collaborative editing model provide a quality assurance that purely AI-driven systems cannot match. Wikipedia may evolve into one of the few remaining "clean" sources of knowledge.

Knowledge not just for humans, but for AI too. The Wikimedia Foundation recently announced that it is partnering with Google-owned Kaggle to release a version of Wikipedia optimized for training AI models. The release starts with English and French and offers stripped-down versions of raw Wikipedia text.

Wikipedia, in turn, could benefit from GenAI if the technology is brought into its editorial processes, helping with translation, content discovery, or synthesis.

Wikipedia's strength lies in its human verification and collaborative fact-checking, while AI excels at synthesis and accessibility. Their combination could create a more powerful knowledge system than either could achieve alone, but the downside of this interplay is that Wikipedia risks contamination from AI-generated (or assisted) edits.

The influence of the two sources on the sphere of knowledge, independently and in tandem, will be an interesting space to watch. The anxiety, I suspect, will not go away.