Collaboration and Caution: Glimpses from an AI Cohort Program

Reflections from a 2-day workshop with an NGO cohort working on AI

Earlier this summer, Project Tech4Dev and The Agency Fund launched an AI Cohort Program to support 7 NGOs with the AI-based solutions they were interested in building and deploying. The program was intended to address typical challenges NGOs face in their AI initiatives: limited funding for experimentation, gaps in in-house tech/AI capacity, and a lack of structured entry points and approaches for exploring or prototyping AI use-cases.

Each NGO was assigned one or two mentors, tasked with guiding and supporting the NGO team through the program. The program also included knowledge partners like Digital Futures Lab and Tattle, who contributed their expertise in cross-cutting topics such as Responsible AI and AI safety.

I was linked to Simple Education Foundation (SEF), a nonprofit focussed on supporting government school teachers with better strategies for student development. Our use-case involved building an AI-powered WhatsApp tool to provide teachers with ongoing, contextual support and resources, while also enabling regular reflections.

After about a month of virtual meet-ups, the cohort came together last week for a 2-day workshop in Bangalore. A summary of the two days is available here and here.

Beyond the individual team huddles, the workshop created a space for the seven NGO teams (with their mentors) to listen to each other’s use-cases and approaches. Unsurprisingly, overlaps and synergies were discovered, and we aligned on how to learn from each other (or reuse parts of already implemented solutions). For instance, the work done by Quest Alliance and Avanti Fellows on teacher-assistance bots is relevant to our Simple Education Foundation use-case.

These early days of building with AI bring to mind earlier tech waves we’ve been through in the last couple of decades: the internet/web era and, within that larger wave, the mobile revolution. In their infancy, those waves also spawned a large number of applications, and it took time for the industry to mature and come up with better (i.e., more efficient, responsible, and safer) ways to use the technology.

I’ve written about how the internet era compares, along some broad themes, with this AI one. What’s been striking about this AI wave is not just the speed of technology advancement but also the pace at which people are evolving strategies to deal with the effects of this new technology.

The AI Cohort workshop brought forward some of these strategies, in the form of presentations from the knowledge partners.

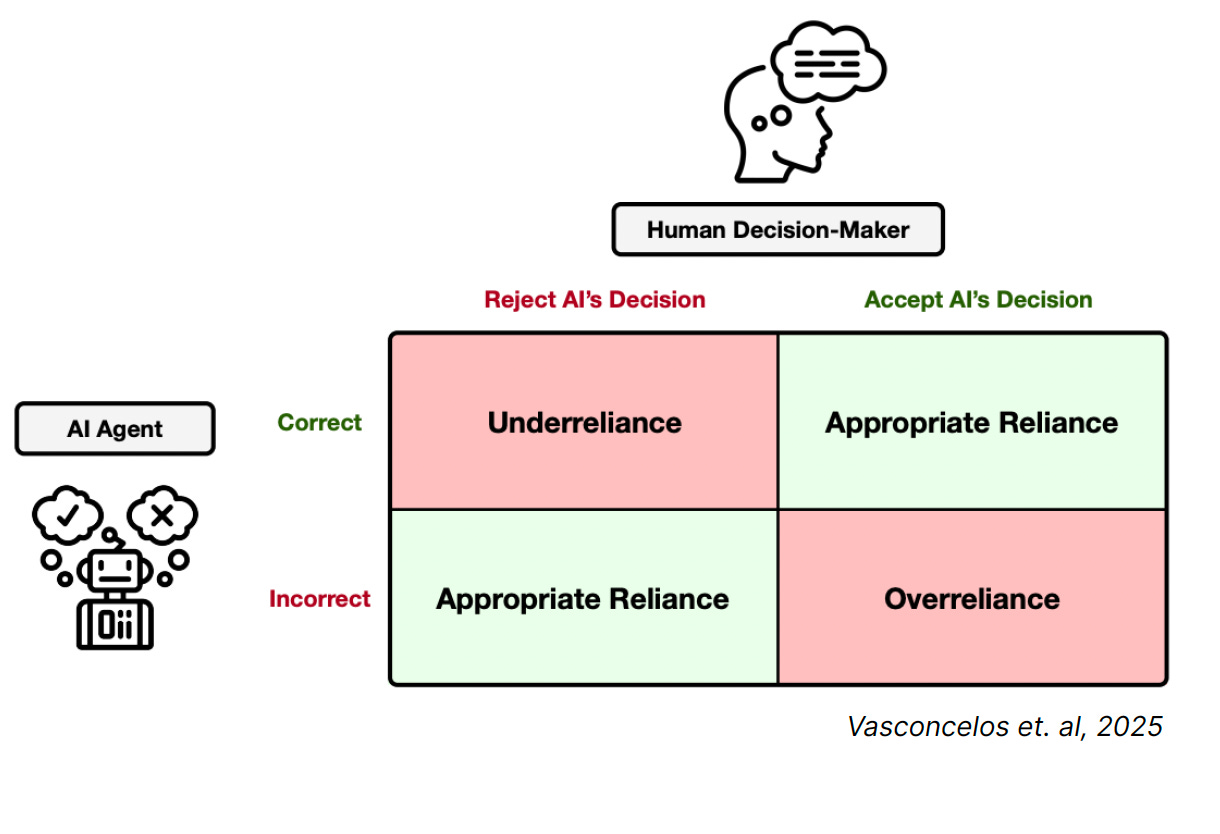

Digital Futures Lab, an “interdisciplinary research studio that studies the complex interplay between technology and society”, held a 2-hour workshop on “Over-reliance on AI”. The session explored the causes, risks, and real-world impacts of over-reliance on AI. We also discussed practical strategies to prevent and mitigate it through positive friction and user-centered design.

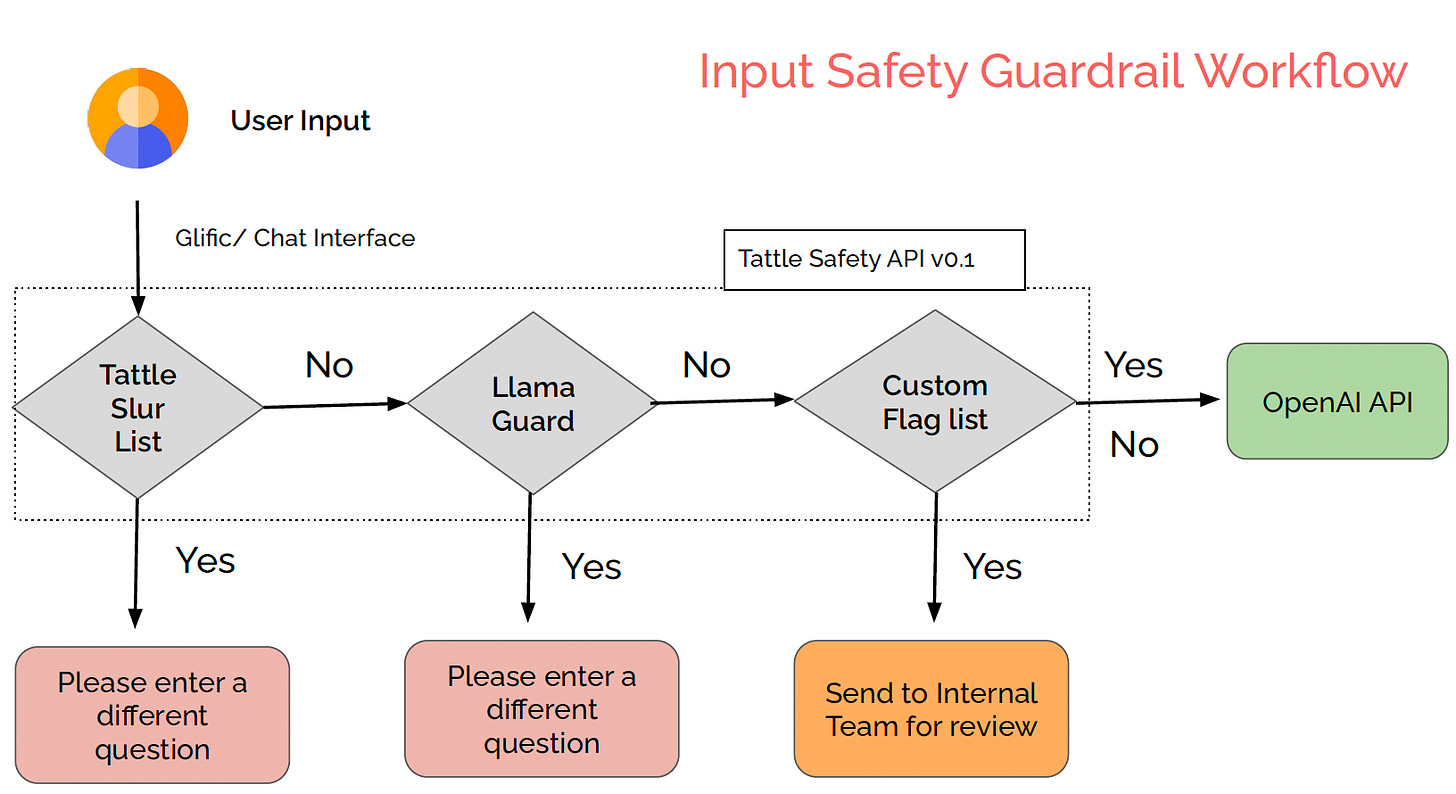

Tattle, “a community of technologists, researchers and artists working towards a healthier online information ecosystem in India”, ran a session on AI Safety Guardrails. It introduced the ABC Model of Platform Safety, which focuses on analyzing content, identifying influential or malicious actors, and analyzing user behavior for unsafe engagement. The session also delved into technical implementations, including lexical and semantic analysis and the application of Llama Guard for input-output safeguards in human-AI conversations. Privacy and copyright considerations were also discussed, with a distinction drawn between private and scraped data. (Their Uli browser plugin includes a “Tattle slur list” to help “moderate and mitigate online gender based violence.”)
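To make the idea of input-output safeguards a little more concrete, here is a minimal sketch of the lexical half of such a guardrail. It is not Tattle's implementation: the blocklist terms, the `guarded_reply` helper, and the `generate` callback standing in for the model are all hypothetical names chosen for illustration. A semantic check (for example, a classifier such as Llama Guard) would presumably sit at the same two checkpoints, before the message reaches the model and before the reply reaches the user.

```python
import re
from typing import Callable, Iterable

# Hypothetical placeholder terms; a real deployment would load a curated list.
BLOCKLIST = {"badword", "slurexample"}

# Hypothetical fallback message returned when a check fails.
REFUSAL = "Sorry, I can't help with that message."


def build_matcher(terms: Iterable[str]) -> re.Pattern:
    """Compile a case-insensitive, whole-word pattern from the blocklist."""
    escaped = sorted((re.escape(t) for t in terms), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(escaped) + r")\b", re.IGNORECASE)


MATCHER = build_matcher(BLOCKLIST)


def is_unsafe(text: str) -> bool:
    """Lexical check: does the text contain any blocklisted term?"""
    return MATCHER.search(text) is not None


def guarded_reply(user_message: str, generate: Callable[[str], str]) -> str:
    """Check the input, call the model, then check the output."""
    if is_unsafe(user_message):      # input safeguard
        return REFUSAL
    reply = generate(user_message)   # 'generate' stands in for the AI model
    if is_unsafe(reply):             # output safeguard
        return REFUSAL
    return reply
```

Purely lexical matching misses paraphrases and context, which is presumably why the session paired it with semantic analysis.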

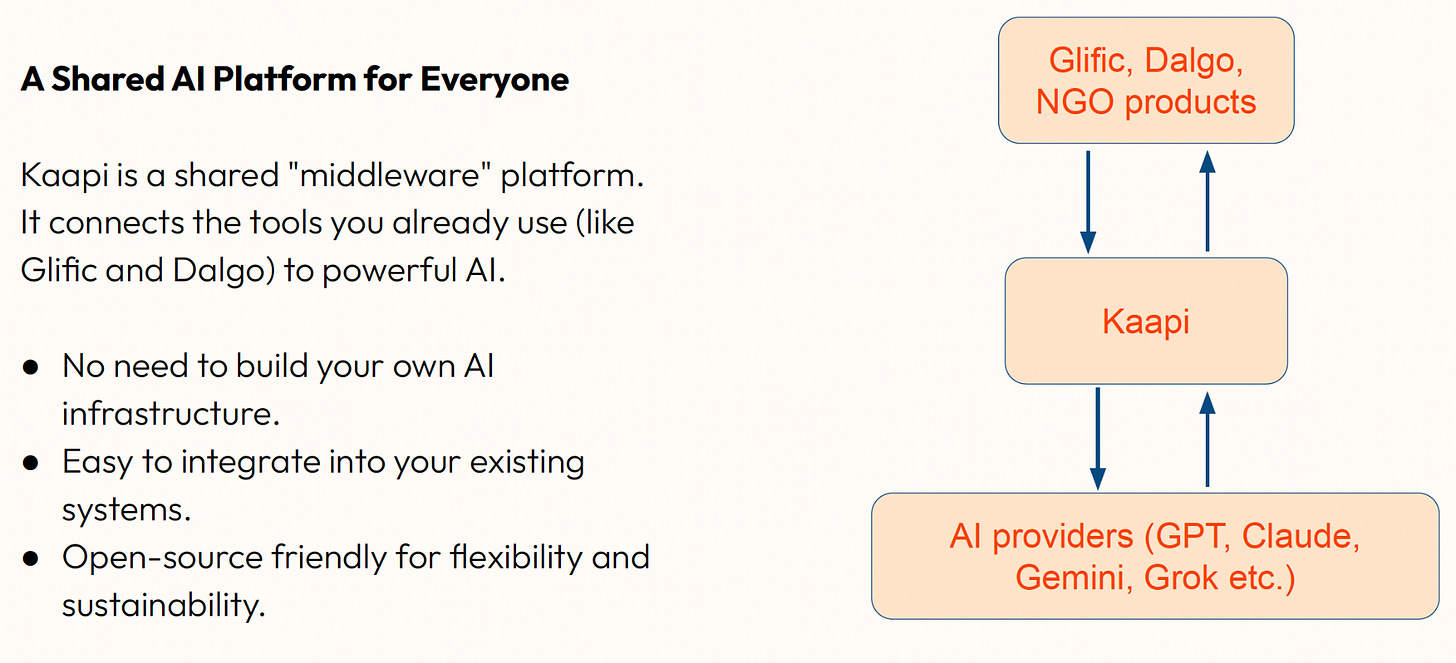

There was also a presentation from Tech4Dev on their Kaapi platform, a shared AI platform for the development sector. It aims to simplify AI adoption for NGOs by providing a middleware that connects existing tools to AI solutions, with features like a unified API, responsible AI guardrails, evaluation, document management, and multilingual support.

Such initiatives – mitigation strategies, guardrails, safety measures, shared modules – took years to materialize in previous technology waves: the early years of the internet and mobile eras were full of enthusiastic coders and entrepreneurs building stuff, largely independently. Those coders and entrepreneurs are riding this AI wave too (this time with the help of AI to generate parts of their solutions), but it’s refreshing to see a counter-movement of sorts emerge alongside all the enthusiasm for AI. This balancing act has its roots in what we’ve learned in the last two decades of technology adoption and diffusion into society.

The inclusion of these cross-functional aspects is a salient feature of this AI Cohort model defined by Project Tech4Dev, a recognition of the fact that solutions to social problems need to include dimensions that go beyond technology.